I need to change the root password on all my hosts but I have a small problem: some hosts have older md5 hashed passwords and the newer ones use the more secure SHA-512 hash. If I did not care about the different hashes and wanted to have SHA-512 across the board I would do a very simple manifest entry to make this happen: Problem is I want to replace the old md5 hashes with new md5 hashes and the old SHA-512 with new SHA-512; not something that Puppet supports very easily. To do this we are going to build a new module with a Custom Fact written in Ruby. First off I need to explain some things if you are new to Puppet.

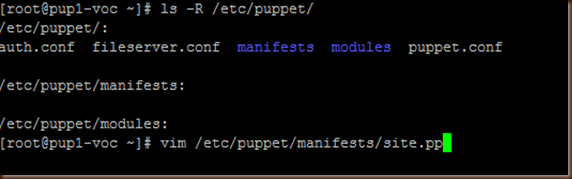

- A module is stored under /etc/puppet/modules and is called via an include declaration in the site.pp Master Manifest

- A module has its own Manifest called init.pp under /etc/puppet/modules/<module_name>/manifests

- Inside the init.pp is a class that MUST have the same name as the module folder. Example: if the folder at /etc/puppet/modules/<module_name> is named "rootpass" then your class declaration must be "class rootpass {...."

- A module is very powerful: besides its manifests, it can carry extra functionality written in Ruby, such as the Custom Fact we are about to build

Now that we have those out of the way, let's start. If you are doing this yourself, here is the folder structure to make life easier:

Let's visit each component a piece at a time.
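As a rough sketch, assuming the module is named "rootpass" as in the example above and follows the standard module layout (custom facts go under lib/facter, manifests under manifests), the tree would look something like this:

```text
/etc/puppet/modules/rootpass/
├── lib/
│   └── facter/
│       └── sha512rootpass.rb
└── manifests/
    └── init.pp
```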

1. Custom Fact - sha512rootpass.rb. Puppet if statements can be a bit tricky (see http://docs.puppetlabs.com/learning/variables.html), so in this case I needed a Custom Fact that checks whether the root password is a SHA-512 hash, which is indicated by the hash starting with "$6$" (md5 starts with "$1$"). If the root password is indeed a SHA-512 hash, the sha512rootpass fact returns "true". This functionality is delivered by Facter, which is written in Ruby; for more information take a look at http://docs.puppetlabs.com/guides/custom_facts.html. My custom fact is silly simple: it just greps the shadow file for "root:$6$*" and, if there is a match, returns "true", which means that root has a SHA-512 hashed password.

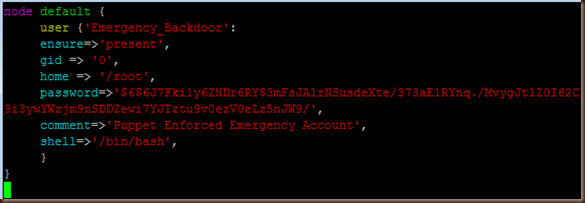
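For readers who cannot see the screenshot, here is a minimal sketch of what such a fact could look like. It is not the exact code from the image: instead of shelling out to grep it reads the shadow file directly in Ruby, and the `RootPassHash` helper module is a hypothetical name introduced only for this illustration. `Facter.add` and `setcode` are the standard Facter calls for registering a custom fact. The sketch returns the strings "true" or "false".

```ruby
# modules/rootpass/lib/facter/sha512rootpass.rb

# RootPassHash is a hypothetical helper module, not part of Facter.
module RootPassHash
  # True when the shadow file at `path` has a root entry whose hash
  # field starts with "$6$" (SHA-512); false otherwise, including when
  # the file is missing or unreadable.
  def self.sha512?(path = '/etc/shadow')
    File.foreach(path).any? { |line| line.start_with?('root:$6$') }
  rescue SystemCallError
    false
  end
end

# Facter is always defined when the agent loads this file; the guard
# only lets the helper be loaded on its own, for example in a test.
if defined?(Facter)
  Facter.add(:sha512rootpass) do
    # Return a string so manifests can compare against 'true'.
    setcode { RootPassHash.sha512?.to_s }
  end
end
```

Once the fact has been synced to an agent, running `facter -p sha512rootpass` on that box should print the value the manifest will see.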

2. New Class - init.pp. The logic for the operation that we actually want to run lives in the init.pp file. Here is where we define our class (reminder: it needs to have the same name as your top-level module folder). This one basically says: "If root is using a SHA-512 password hash (indicated by $sha512rootpass being "true"), replace it with this new SHA-512 hash. If not, assume it's md5 and replace it with this new md5 hash."

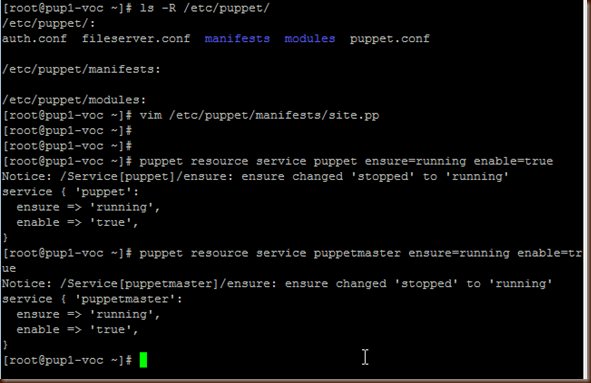

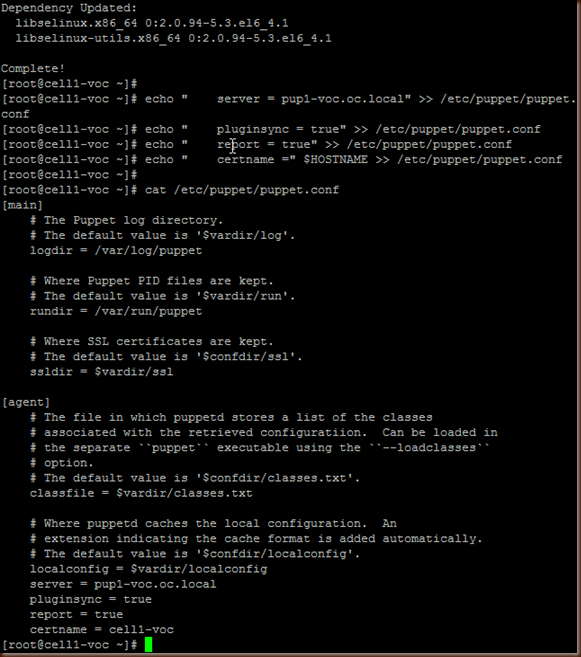

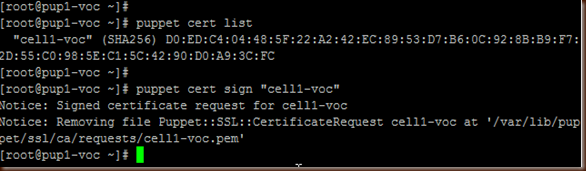
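In text form, the class described in step 2 might look roughly like the sketch below. It assumes the fact comes back as the string "true", so the comparison is against 'true' rather than a bare truth test. The hash values are placeholders, not real hashes. Single quotes matter here, because Puppet would try to interpolate the "$" signs inside a double-quoted string.

```puppet
# /etc/puppet/modules/rootpass/manifests/init.pp
class rootpass {
  # $::sha512rootpass is the custom fact, looked up at top scope.
  if $::sha512rootpass == 'true' {
    user { 'root':
      ensure   => present,
      password => '$6$<salt>$<new-sha512-hash>',  # placeholder
    }
  } else {
    # Not SHA-512, so assume md5.
    user { 'root':
      ensure   => present,
      password => '$1$<salt>$<new-md5-hash>',     # placeholder
    }
  }
}
```

Declaring the root user in both branches is fine because only one branch is ever evaluated for a given node.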

Now we need to tell Puppet which servers to apply this to, which is done by modifying the site.pp Manifest on the Puppet Master. For now I'm going to apply it to all my nodes, so I just add it to my default node. If you wanted, you could instead add a new section that says "node <hostname> {include rootpass}" and it would be applied to just that host.

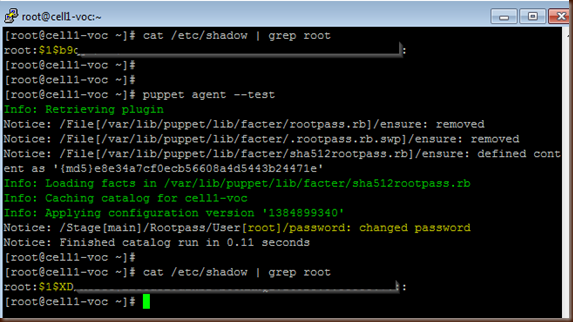

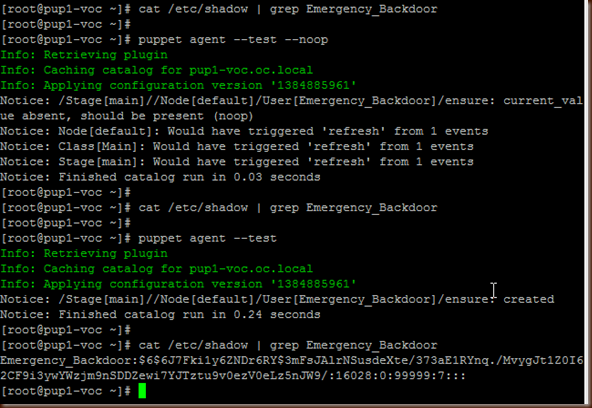
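As a sketch of the site.pp change, assuming a plain default node block, it could be as small as this. The hostname in the commented-out alternative is a made-up example:

```puppet
# site.pp on the Puppet Master
node default {
  include rootpass
}

# To target a single host instead (hypothetical hostname):
# node 'agent01.example.com' {
#   include rootpass
# }
```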

Now let's test it on an agent box that has an md5 hashed password and a box that has a SHA-512 password. Our older box with md5 is the first up to bat...

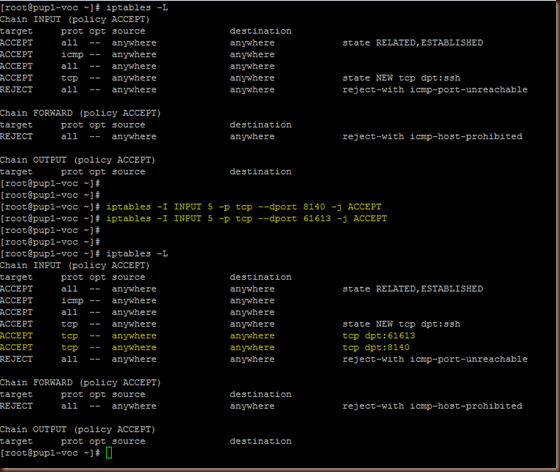

As you can see, the password was an md5 hash ($1$) and was changed appropriately. Next let's look at a box with SHA-512.

As you can see, the old password was a SHA-512 hash and has been replaced with the new SHA-512 hash. Success!